Pablo uses MakarenaLabs’ MuseBox to produce a neural network, which is then deployed onto an AMD (formerly Xilinx) FPGA. The network becomes part of a processing chain that takes the data captured by the webcam in front of Pablo and by the microphone, and turns it into the desired movements of the head, eyes, and mouth.

Why Pablo?

There are many open-source robotics projects, such as DIY animatronics, but Pablo is much more than that.

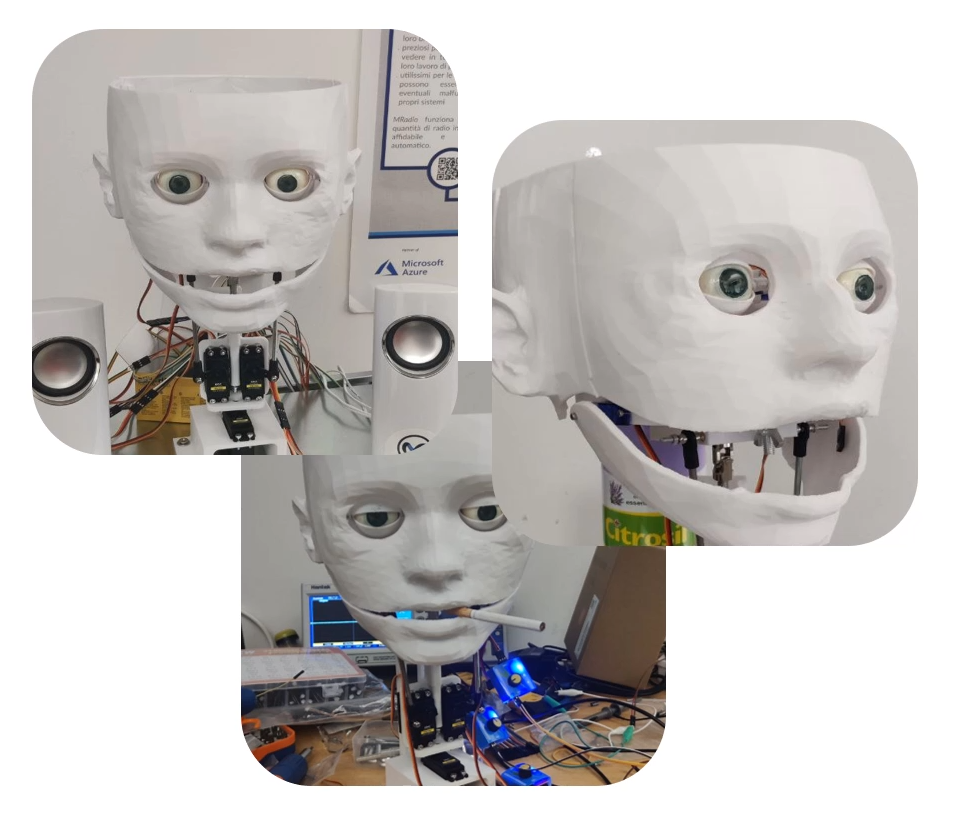

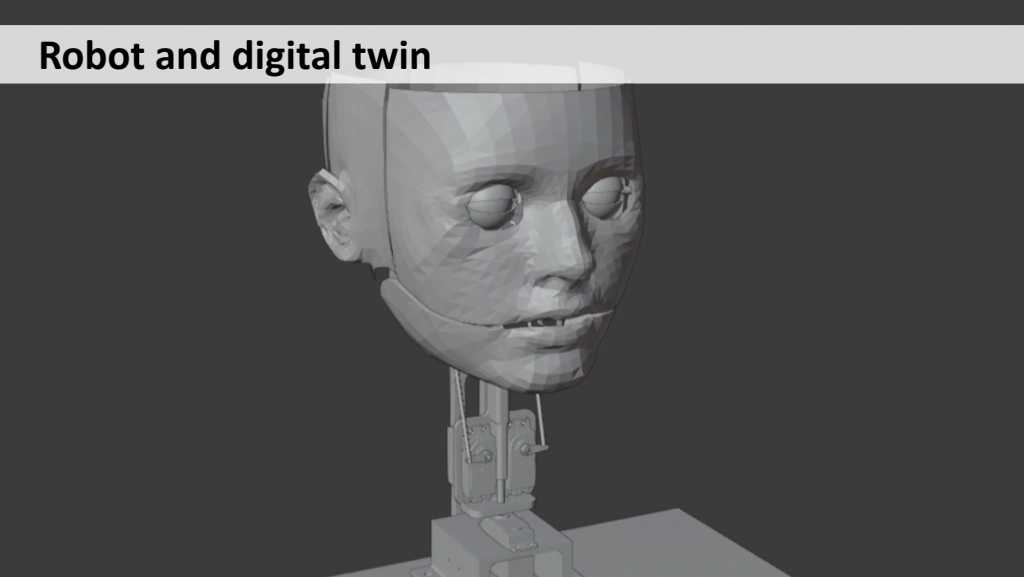

Pablo is a smart robot that runs a collection of AI models and FPGA IPs, and it is also a fully customizable and reconfigurable humanoid robot. In addition, because it is 3D printed, it is completely reproducible.

Pablo is useful for:

- an eye-catching demo for trade fairs

- a customizable human phantom for artificial intelligence tasks

- digital twin analysis of human interaction

- the entertainment industry

- entertaining people

- medical and educational tasks, including for people with mental health conditions

- boring, repetitive tests that would otherwise require a human (and Pablo never gets tired!)

Physical components

Pablo is a custom-made robot, so it is worth detailing its main physical components:

- 3D-printed face

- Resin-printed eyes

- 3D-printed joints and links to move the eyes and the head

- KR260 AMD Xilinx SOM FPGA

- PYNQ Z2 AMD Xilinx FPGA board for analog-to-digital conversion and diagnostics

The Logical Components

This section details how the workload is distributed on the board.

The Kria KR260 is responsible for running MuseBox’s AI tasks:

- Face Detection

- Face Recognition

- People Detection

- People Tracking

- Speech to Text

- Audio Filtering

- Audio Classification

The overall management of the AI tasks is handled through a publish/subscribe mechanism, which is built into the MuseBox product together with a predefined data model. Moreover, MuseBox lets users create their own AI applications (or use the existing ones) with very little effort, because it takes care of scheduling and task management (which are generally quite problematic!).
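To make the publish/subscribe idea concrete, here is a minimal in-process sketch. It is not MuseBox's implementation or data model: the `Broker` class, the topic names, and the message shapes are all hypothetical and only illustrate how one AI task can feed another without the two knowing about each other.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List


class Broker:
    """Tiny in-process publish/subscribe broker (illustrative only)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[Any], None]) -> None:
        """Register a handler that is called for every message on a topic."""
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: Any) -> int:
        """Deliver a message to all handlers; return how many received it."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(message)
        return len(handlers)


broker = Broker()
greetings: List[str] = []

# Hypothetical "recognition" stage: consumes detections, publishes a name.
# The name is hard-coded here; a real stage would run a model.
broker.subscribe(
    "face/detected",
    lambda box: broker.publish("face/recognized", {"name": "Ada", "box": box}),
)

# Hypothetical "greeting" stage: consumes recognized faces.
broker.subscribe(
    "face/recognized",
    lambda msg: greetings.append(f"Hello, {msg['name']}!"),
)

# A detection stage would publish bounding boxes like this one.
broker.publish("face/detected", (10, 20, 64, 64))
print(greetings)
```

Each stage only knows topic names, so a new task can be added by subscribing to an existing topic without touching the other stages.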

Python / PYNQ API

Pablo’s software stack is based on the MuseBox API and the PYNQ framework; the latter lets you program and control the entire FPGA flow (motor and sensor control and machine learning tasks).

With PYNQ, you can use the FPGA from Python and control the head movements and the other tasks through high-level functions.

The table below lists the common APIs:

| Function name | Description | Parameters | Return |
|---|---|---|---|
| set_servo_angle(servo, angle): void | set the angle of a specific servo motor | servo: the servo reference; angle: the angle in degrees (from 0 to 180) | |
| send_audio(audio): void | send audio to the FPGA (and play it on the speaker) | audio: the PCM audio source | |
| listen_audio(): PCM array | get PCM audio from the FPGA | | PCM array which represents the recorded audio |
| face_detect(image): BoundingBox array | get the bounding boxes of faces from an image | image: CV2 image | array of face bounding boxes |
| face_recognition(image): string | get the name of the recognized face | image: CV2 image of a face (derived from a bounding box) | name of the recognized face |
| face_landmarking(image): Points array | get 98 landmark points of the face | image: CV2 image of a face (derived from a bounding box) | 98 landmark points |
| text_from_audio(audio): string | get the text from an audio source | audio: the PCM audio source | the transcribed text |

The servo references are:

- eyelid_up

- eyelid_down

- eye_x_dx

- eye_y_dx

- eye_x_sx

- eye_y_sx

- jaw_sx

- jaw_dx

- neck_tilt_sx

- neck_tilt_dx

- neck_pan

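For example, to sweep the neck from side to side:

```python
import time

# set_servo_angle and neck_pan are provided by Pablo's Python API.
tt = 0.05  # delay between steps in seconds (assumed value, not given in the original)

set_servo_angle(neck_pan, 90)  # reset to center

# Sweep from 70 up to 119 degrees
for angle in range(70, 120):
    set_servo_angle(neck_pan, angle)
    time.sleep(tt)

# Sweep back from 120 down to 71 degrees
for angle in range(120, 70, -1):
    set_servo_angle(neck_pan, angle)
    time.sleep(tt)

set_servo_angle(neck_pan, 90)  # reset to center
```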
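The same servo call can be driven by the vision functions. The sketch below maps the horizontal position of a detected face to a `neck_pan` angle. It rests on several assumptions that are not confirmed by the API table: that a bounding box is an `(x, y, width, height)` tuple in pixels, that the camera covers roughly 60 degrees horizontally, and that larger angles turn the neck toward the right side of the image. The helper names `clamp` and `pan_angle_for_face` are hypothetical.

```python
def clamp(value, low, high):
    """Restrict value to the closed interval [low, high]."""
    return max(low, min(high, value))


def pan_angle_for_face(box, frame_width, field_of_view_deg=60.0, center_angle=90.0):
    """Map the horizontal center of a face box to a servo angle in [0, 180].

    box is assumed to be (x, y, width, height) in pixels.
    """
    x, _y, width, _height = box
    face_center = x + width / 2.0
    # Offset of the face from the image center, normalized to [-0.5, 0.5].
    offset = face_center / frame_width - 0.5
    angle = center_angle + offset * field_of_view_deg
    # The servo only accepts angles from 0 to 180 degrees.
    return int(round(clamp(angle, 0, 180)))


# A face centered in a 640-pixel-wide frame keeps the neck at its center angle.
print(pan_angle_for_face((300, 0, 40, 40), 640))  # 90
```

With Pablo's API available, the result could be passed straight to the servo, for example `set_servo_angle(neck_pan, pan_angle_for_face(box, frame_width))`. The sign of the offset may need to be flipped depending on how the camera and the servo are mounted.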

Web API

If you want to deploy Pablo on a heterogeneous system, it is possible to use the web API.

Pablo has two main external communication channels:

- a classic HTTP client/server channel

- a ZMQ publish/subscribe channel

In both cases, Pablo accepts requests in JSON format that mirror the Python API.

In the HTTP API, Pablo is the server and the external system is the client; the endpoint is [Pablo IP]:8080/request.

In the ZMQ API, Pablo publishes on port 6969 and subscribes on port 6868. The subscriber IP is defined in the configuration file stored in Pablo’s memory.

For example, if you want to move the neck to 90 degrees:

```json
{
  "function": "set_servo_angle",
  "parameters": ["neck_pan", 90]
}
```

A piece of cake!
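From an external client, a request like this could be sent with nothing but the Python standard library. This is a sketch under assumptions: the article gives the endpoint and the JSON body but not the HTTP method, the headers, or the response format, so the use of POST, the `Content-Type` header, and the plain-text response handling are guesses. The helper names `build_request` and `call_pablo` are hypothetical.

```python
import json
import urllib.request


def build_request(function, *parameters):
    """Encode a call as a JSON body with "function" and "parameters" keys."""
    payload = {"function": function, "parameters": list(parameters)}
    return json.dumps(payload).encode("utf-8")


def call_pablo(host, function, *parameters, timeout=5.0):
    """Send one request to Pablo's HTTP endpoint and return the raw reply.

    POST and the JSON content type are assumptions; adjust them to match
    what the server actually expects.
    """
    request = urllib.request.Request(
        f"http://{host}:8080/request",
        data=build_request(function, *parameters),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")


# Building the body does not need a network connection.
body = build_request("set_servo_angle", "neck_pan", 90)
print(body.decode("utf-8"))
# {"function": "set_servo_angle", "parameters": ["neck_pan", 90]}
```

With a reachable robot, `call_pablo("<Pablo IP>", "set_servo_angle", "neck_pan", 90)` would send the same request as the JSON example.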

Release History

| ID | Name | Description | Components | ML Tasks | Date |
|---|---|---|---|---|---|
| Pablo | Pablo | A frontal face in a box that follows you with the eyes and says your name | static face, moving eyes, moving mouth, PYNQ Z2, KV260 | face detection, face recognition, text to speech | 10/05/2022 |
| Pablo-1 | Pablo Otto | Complete face with neck; follows your head movements and says your name | moving face, moving eyes, moving neck, moving mouth, production KRIA SOM | face detection, face recognition, face landmarking, text to speech | 21/06/2022 |
| Pablo-2 | Pablo Otto Von Huber | Mechanical and electronic improvements over the previous release | moving face, moving eyes, moving neck, moving mouth, production KRIA SOM | face detection, face recognition, face landmarking, text to speech | 04/10/2022 |
| Pablo-3 | Pablo Otto Von Huber Steiner | Mechanical and electronic improvements over the previous release; can recognize some words | moving face, moving eyes, moving neck, moving mouth, production KRIA SOM | face detection, face recognition, face landmarking, text to speech, speech to text | 08/11/2022 |
| Pablo-4 | ??? | | | | |
| Pablo-5 | ??? | | | | |
| Pablo-6 | ??? | | | | |
| Pablo-7 | ??? | | | | |