MuseBox Certification From Xilinx

Introduction to MuseBox Certification

MuseBox Certification from Xilinx is here! But what is MuseBox? It is a real-time machine learning system that runs on FPGAs using the Deep Learning Processing Unit (DPU) from Xilinx.

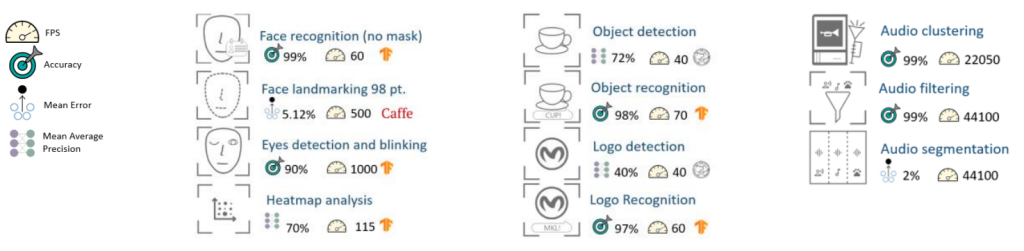

MuseBox currently supports 5 different certification tasks:

- Face Analysis

- People Analysis

- Audio Analysis

- Object Analysis

- Medical Analysis

In detail, MuseBox offers 24 pre-trained AI models to solve the 5 tasks listed above. These models can be re-trained to solve slightly different tasks that customers may have. But is this sufficient to create a machine learning product? No! Let us discover what MuseBox offers beyond other solutions and why Xilinx has certified it as a product.

AI Application Common Drawbacks

When developing AI applications, most engineers focus on the AI model. They put their best efforts into selecting the dataset, choosing the best metric for the loss function (when using Deep Learning), and the other steps needed to set up a robust training environment.

However, commercial AI applications share a common drawback: the environment surrounding the AI model. Specifically, the inputs and outputs of the model and the scheduling of the models (if the application has more than one) are also fundamental, even if they are sometimes not considered as important as developing a robust AI model.

To be clear, an AI application which does not have a robust pipeline (data acquisition, data pre-processing, AI Inference, data post-processing, model scheduling, concurrency management) is completely unusable even if the AI model is the most robust ever built.
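As a rough illustration of why the stages around the model matter, the pipeline listed above can be sketched as a chain of small functions. This is a generic sketch, not MuseBox code: `run_pipeline`, `preprocess`, `infer` and `postprocess` are hypothetical names, and the "model" is a trivial stand-in that averages pixel values.

```python
from typing import Callable, List, Sequence

# A stage is any function that takes the previous stage's output.
Stage = Callable[[object], object]


def run_pipeline(samples: Sequence[object], stages: Sequence[Stage]) -> List[object]:
    """Push every acquired sample through all stages, in order."""
    results = []
    for sample in samples:
        value = sample
        for stage in stages:
            value = stage(value)
        results.append(value)
    return results


def preprocess(frame: List[int]) -> List[float]:
    # Hypothetical pre-processing: scale 8-bit pixels to [0, 1].
    return [pixel / 255.0 for pixel in frame]


def infer(tensor: List[float]) -> float:
    # Stand-in for the AI model: mean intensity as a "score".
    return sum(tensor) / len(tensor)


def postprocess(score: float) -> str:
    # Hypothetical post-processing: turn the score into a label.
    return "bright" if score > 0.5 else "dark"


# Two tiny fake frames play the role of acquired data.
frames = [[0, 0, 255], [255, 255, 0]]
labels = run_pipeline(frames, [preprocess, infer, postprocess])
print(labels)
```

If any single stage fails or stalls, no label comes out, regardless of how good `infer` is. That is the point made above about the whole pipeline needing to be robust.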

Let us detail how MuseBox solves those problems.

MuseBox Certification Features

Let's start with data acquisition, which consists of grabbing audio and video frames from the sources. To collect and sort the frames, MuseBox uses a queue and a queue manager that manages the collected samples. The queue manager is necessary to avoid race conditions and to handle multiple inputs.
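The idea of a queue manager guarding a shared queue can be sketched with Python's standard library. This is only an illustration under assumptions: `QueueManager` and `capture` are hypothetical names, the "frames" are plain integers, and the actual MuseBox implementation is not described in this article.

```python
import queue
import threading


class QueueManager:
    """Hypothetical manager: one bounded, thread-safe queue shared by all sources."""

    def __init__(self, maxsize: int = 64) -> None:
        # queue.Queue does its own locking, so concurrent producers cannot corrupt it.
        self._queue = queue.Queue(maxsize=maxsize)

    def put(self, source: str, sample: int) -> None:
        # Blocks when the queue is full, which applies back-pressure to the source.
        self._queue.put((source, sample))

    def get(self, timeout: float = 1.0):
        # Raises queue.Empty if nothing arrives within the timeout.
        return self._queue.get(timeout=timeout)


def capture(manager: QueueManager, source: str, n_frames: int) -> None:
    # Stand-in for a real grabber: emits frame indices instead of audio/video data.
    for index in range(n_frames):
        manager.put(source, index)


manager = QueueManager()
producers = [
    threading.Thread(target=capture, args=(manager, name, 5))
    for name in ("camera", "microphone")
]
for thread in producers:
    thread.start()
for thread in producers:
    thread.join()

# Drain the queue: samples from both sources are interleaved,
# but each source's own frames stay in order.
collected = [manager.get() for _ in range(10)]
camera_frames = [sample for source, sample in collected if source == "camera"]
print(camera_frames)
```

Tagging each sample with its source is one simple way to sort the frames afterwards. The interleaving between sources depends on thread timing, so only the per-source order is guaranteed.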

Secondly, the pre-processing and post-processing stack is based on multiple frameworks, which the user can build depending on the OS configuration.

In addition, MuseBox performs and manages the AI Inference using the Vitis AI RunTime (VART) and the Xilinx Intermediate Representation (XIR) framework. These dependencies ensure the compatibility of MuseBox with every Xilinx board that supports a systolic array or a DPU.

Moreover, MuseBox supports different task scheduling schemes by wrapping the data processing stack and the Vitis dependencies. This feature is essential when building complex applications with tens of tasks.
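To make "different schemes of scheduling" concrete, here is a small sketch that compares two generic policies, round-robin and fixed priority. The article does not say which schemes MuseBox actually implements, so both functions, the task names and the frame counts are illustrative assumptions.

```python
import heapq
from collections import deque
from itertools import count
from typing import Dict, List


def round_robin(work: Dict[str, int]) -> List[str]:
    """Each task processes one frame per turn until its frames run out.

    Assumes every task has at least one frame to process.
    """
    pending = deque(work.items())
    order = []
    while pending:
        name, remaining = pending.popleft()
        order.append(name)
        if remaining > 1:
            pending.append((name, remaining - 1))
    return order


def by_priority(work: Dict[str, int], priority: Dict[str, int]) -> List[str]:
    """Tasks run to completion, lowest priority number first."""
    tie_breaker = count()  # keeps insertion order for equal priorities
    heap = [(priority[name], next(tie_breaker), name, frames)
            for name, frames in work.items()]
    heapq.heapify(heap)
    order = []
    while heap:
        _, _, name, frames = heapq.heappop(heap)
        order.extend([name] * frames)
    return order


# Hypothetical workload: number of frames waiting for each task.
work = {"face": 2, "audio": 1, "object": 2}

print(round_robin(work))
print(by_priority(work, {"face": 1, "audio": 0, "object": 2}))
```

Round-robin keeps every task responsive, while a priority scheme lets a latency-sensitive task finish first. With tens of tasks sharing one accelerator, that choice can noticeably change how the application behaves.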

The protocols and frameworks supported by MuseBox for intranet and internet communications are:

- ZMQ

- WebSocket

- Socket.IO

- WordPress (as a plugin)

- PYNQ

- RabbitMQ

- ROS

- ROS2 (buildable with Kria Robotic Stack)

To conclude, MuseBox supports all the features needed for a complete AI solution, from AI models to data management, by way of task scheduling.

You can find all the documentation here:

https://www.xilinx.com/products/acceleration-solutions/1-1izohqy.html

https://www.xilinx.com/publications/solution-briefs/partner/musebox-solution-brief.pdf

Conclusion and Final Remarks on MuseBox Certification

In this article we have introduced MuseBox and its features. MuseBox can be delivered as a final binary or as a set of APIs, and it supports different workloads, from Edge AI to large Datacenter Inference.

Take the next step and try MuseBox out!

In conclusion, MakarenaLabs would like to thank Xilinx and all the people involved in the process of certifying MuseBox for the help and the kindness shown.

Do you want to use Python, MuseBox and FPGAs all together? Check out our latest article: PYNQ-DPU on CorazonAI
